Mạng tích chập neural network

Tìm hiểu về kiến trúc và cách cài đặt mạng tích chập neural

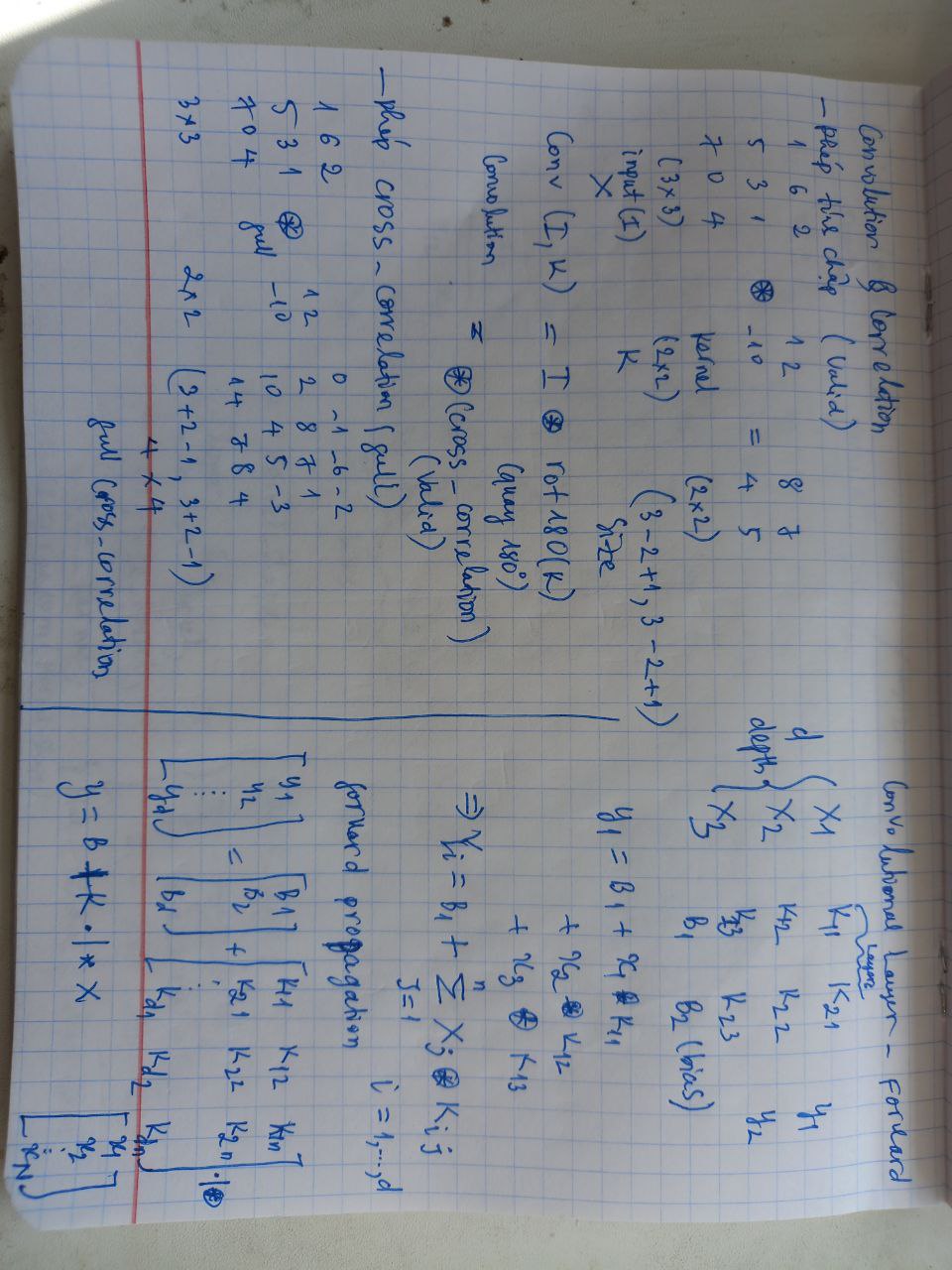

Convolution & Correlation

Giới thiệu phép tích chập và phép correlation

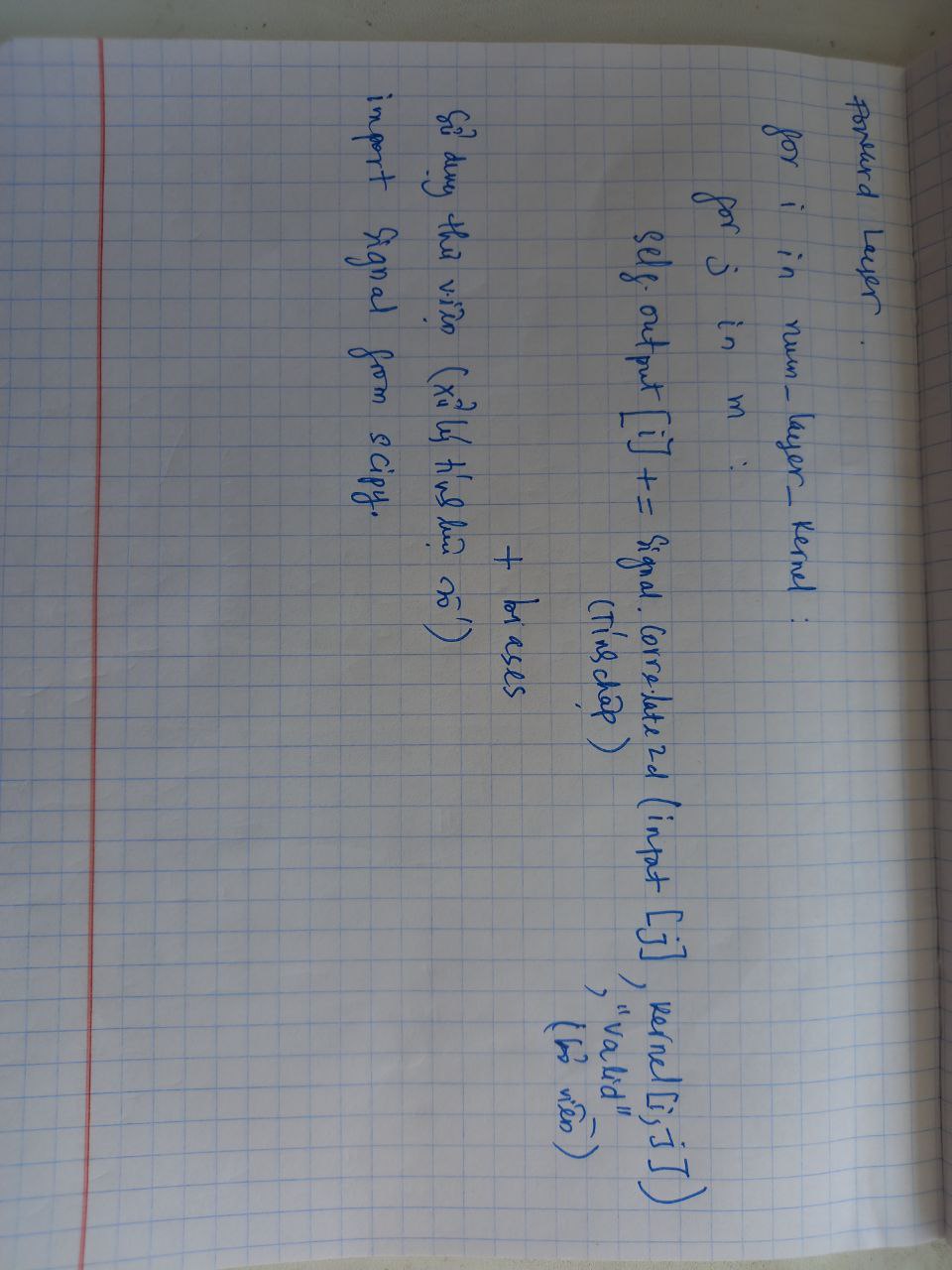

Convolution Layer - Forward

Giới thiệu công thức tính forward

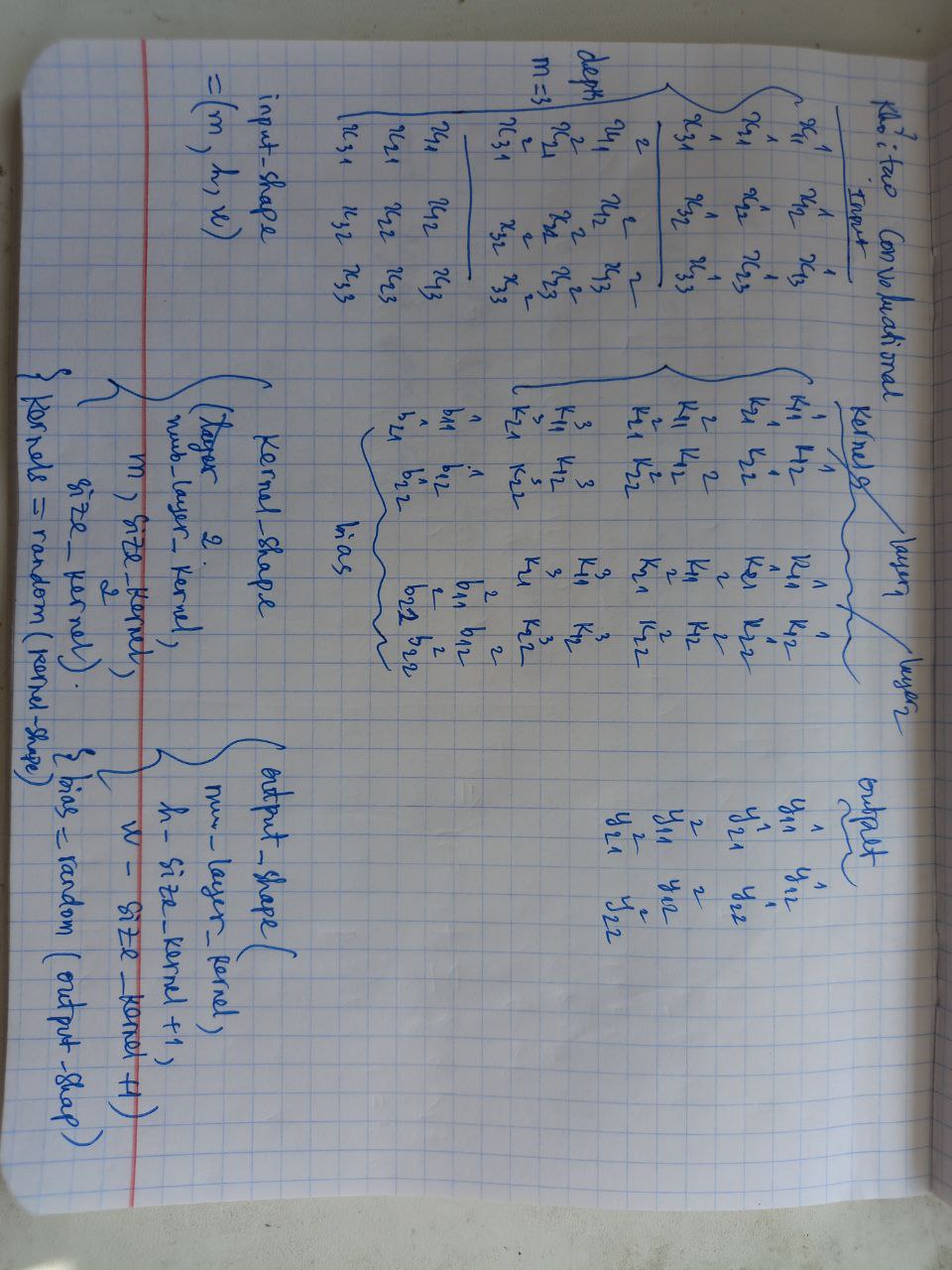

Khởi tạo Convolution

Giải thích và cài đặt

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

import numpy as np

from scipy import signal

from layer import Layer

class Convolutional(Layer):

# Tinh forward va backward cho lop Convolutional

def __init__(self, input_shape, kernel_size, depth):

input_depth, input_height, input_width = input_shape # (inputshape (m, h, w)

# input_depth: so luong input dau vao (m: size)

# depth: nume_layer_kernel

self.depth = depth

self.input_shape = input_shape

self.input_depth = input_depth

self.output_shape = (depth, input_height - kernel_size + 1, input_width - kernel_size + 1)

self.kernels_shape = (depth, input_depth, kernel_size, kernel_size)

#output_shape (num_layer_kernel, h - size_kernel + 1, w - size_kernel + 1)

#kernel_shape (num_layer_kernel, m, kernel_size, kernel_size)

#kernels (dua tren kernels_shape

self.kernels = np.random.randn(*self.kernels_shape)

#biases (dua tren size output_shape)

self.biases = np.random.randn(*self.output_shape)

def forward(self, input): # input * kernel + bias

# duyet tu num_layer_kernel, duyet cac input dulieu

# tinh tung output[i] (tu dau den cuoi)

self.input = input

self.output = np.copy(self.biases)

for i in range(self.depth):

for j in range(self.input_depth):

self.output[i] += signal.correlate2d(self.input[j], self.kernels[i, j], "valid")

return self.output

# dau vao: output_gradient, learning

# cach tinh da duoc giai thich o cac hinh (back_ward1,2,3,4)

def backward(self, output_gradient, learning_rate):

kernels_gradient = np.zeros(self.kernels_shape)

input_gradient = np.zeros(self.input_shape)

for i in range(self.depth):

for j in range(self.input_depth):

kernels_gradient[i, j] = signal.correlate2d(self.input[j], output_gradient[i], "valid")

input_gradient[j] += signal.convolve2d(output_gradient[i], self.kernels[i, j], "full")

self.kernels -= learning_rate * kernels_gradient

self.biases -= learning_rate * output_gradient

return input_gradient

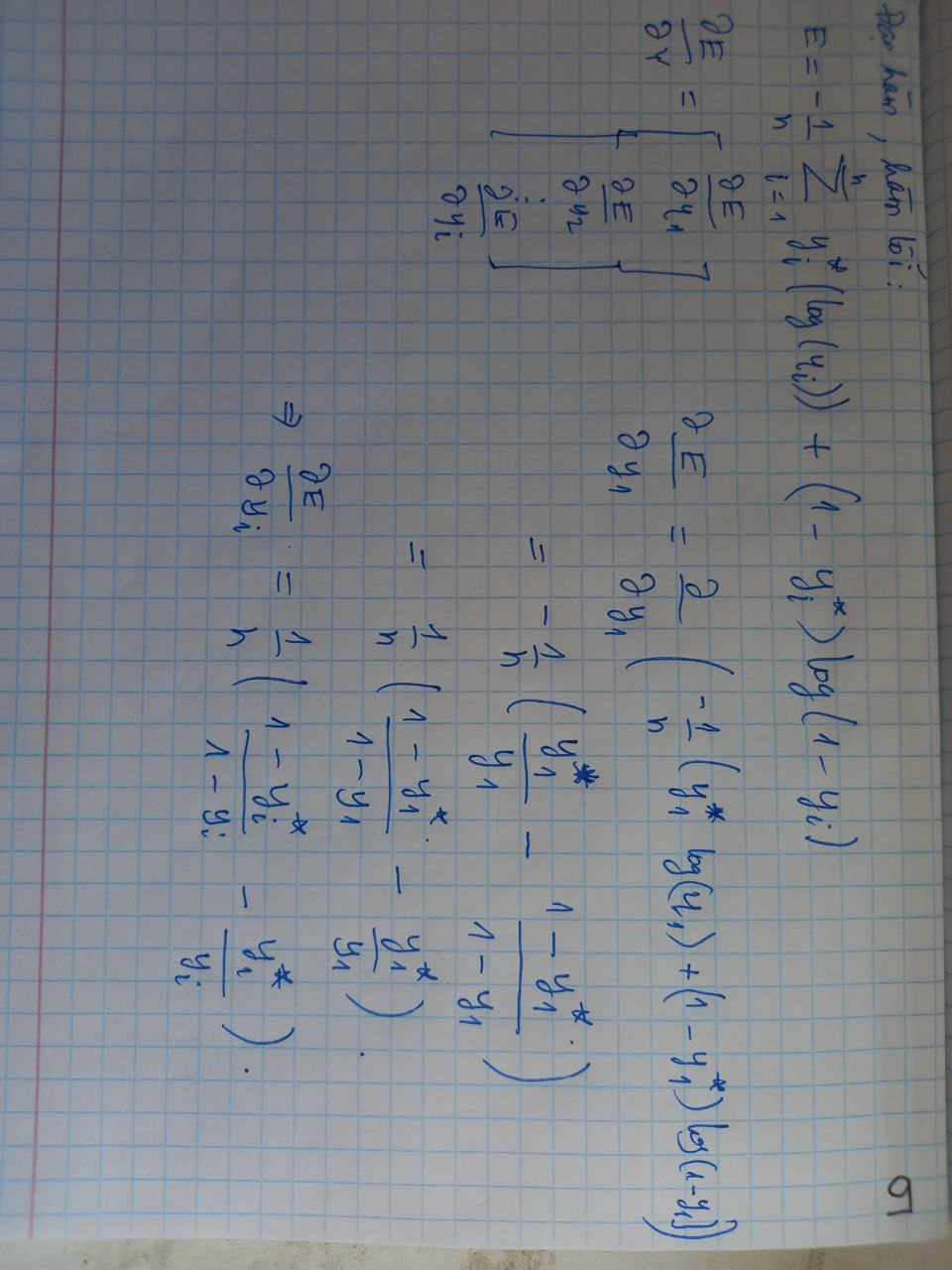

Hàm lỗi và đạo hàm

Cách tính hàm lỗi và đạo hàm

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

import numpy as np

# Do loi mse (binh phuong do lech)

def mse(y_true, y_pred):

return np.mean(np.power(y_true - y_pred, 2))

# Dao ham cua mse giua (y_true vs y_pred)

def mse_prime(y_true, y_pred):

return 2 * (y_pred - y_true) / np.size(y_true)

# Gia tri cua ham loi giua y_true vs y_pred (predict)

def binary_cross_entropy(y_true, y_pred):

return np.mean(-y_true * np.log(y_pred) - (1 - y_true) * np.log(1 - y_pred))

# Dao ham cua ham loi giua y_true va y_predict

def binary_cross_entropy_prime(y_true, y_pred):

return ((1 - y_true) / (1 - y_pred) - y_true / y_pred) / np.size(y_true)

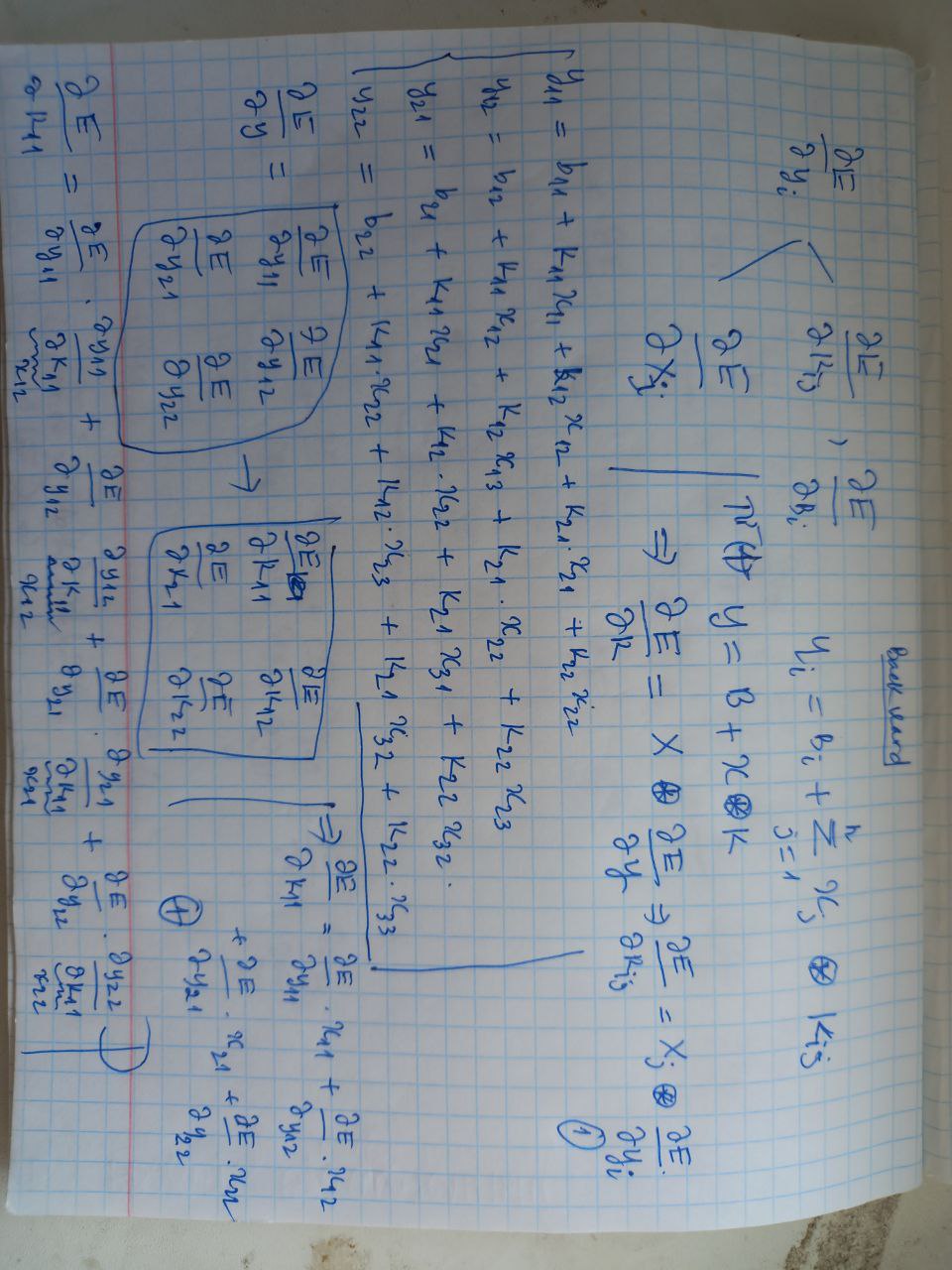

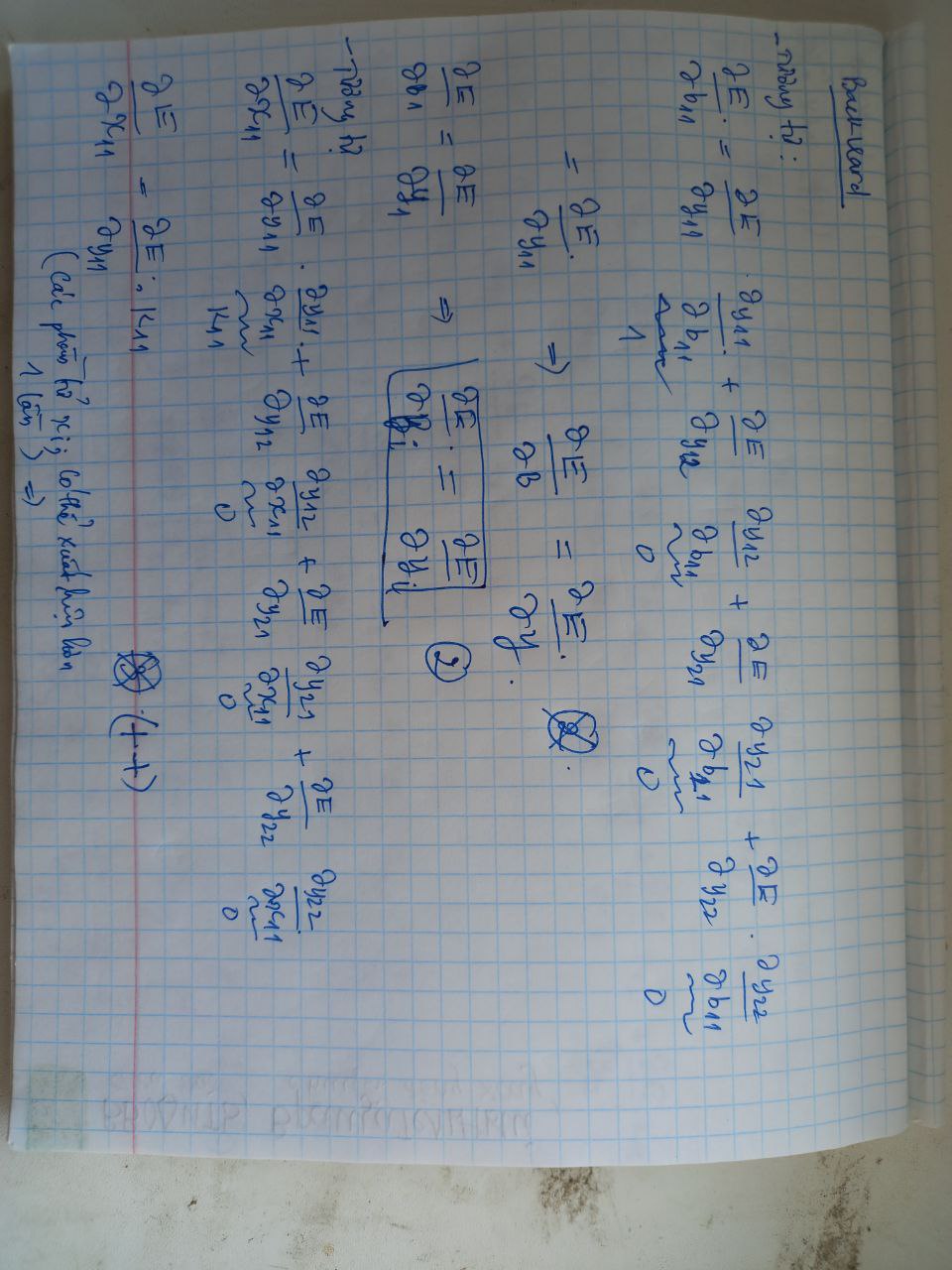

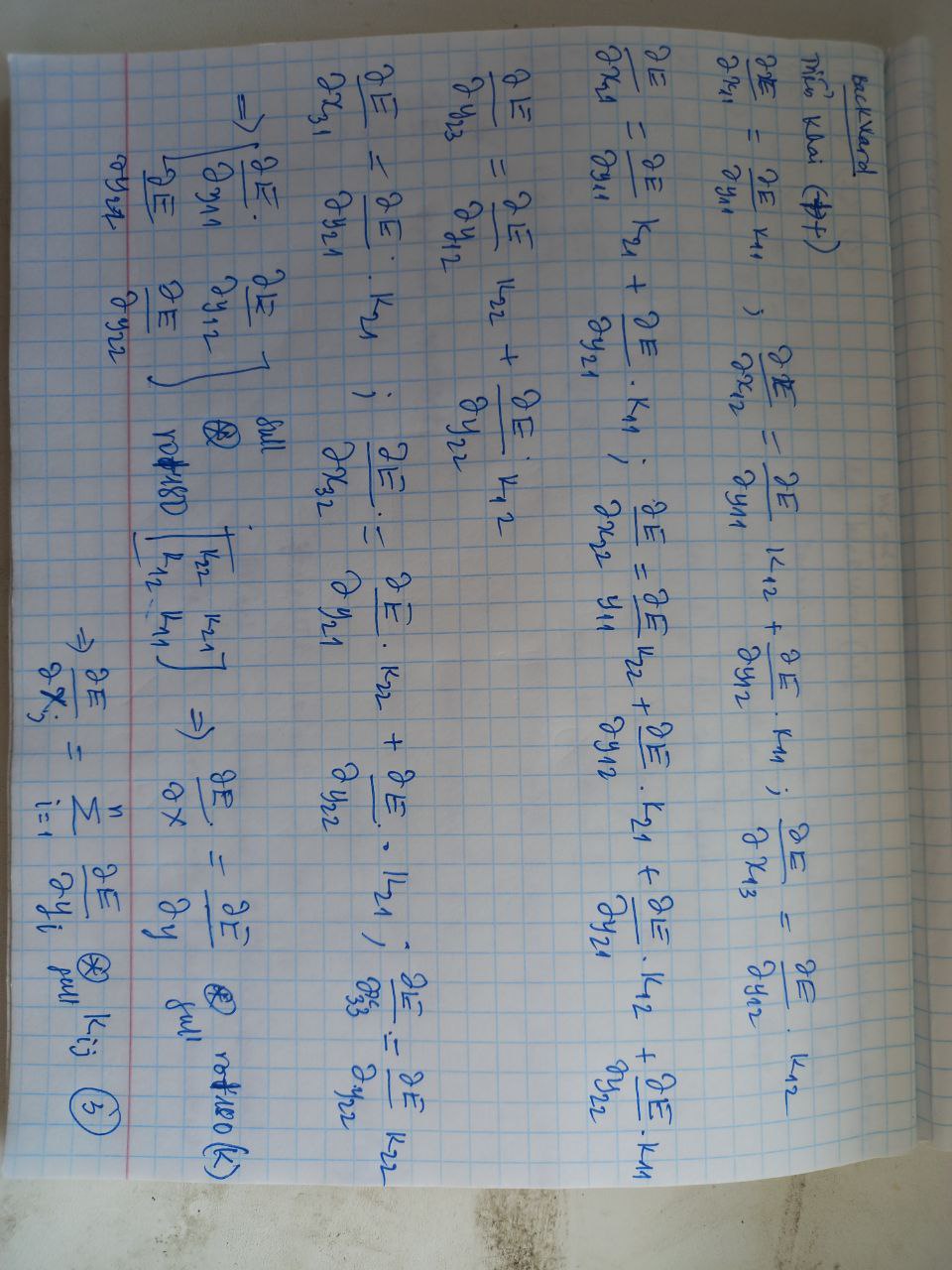

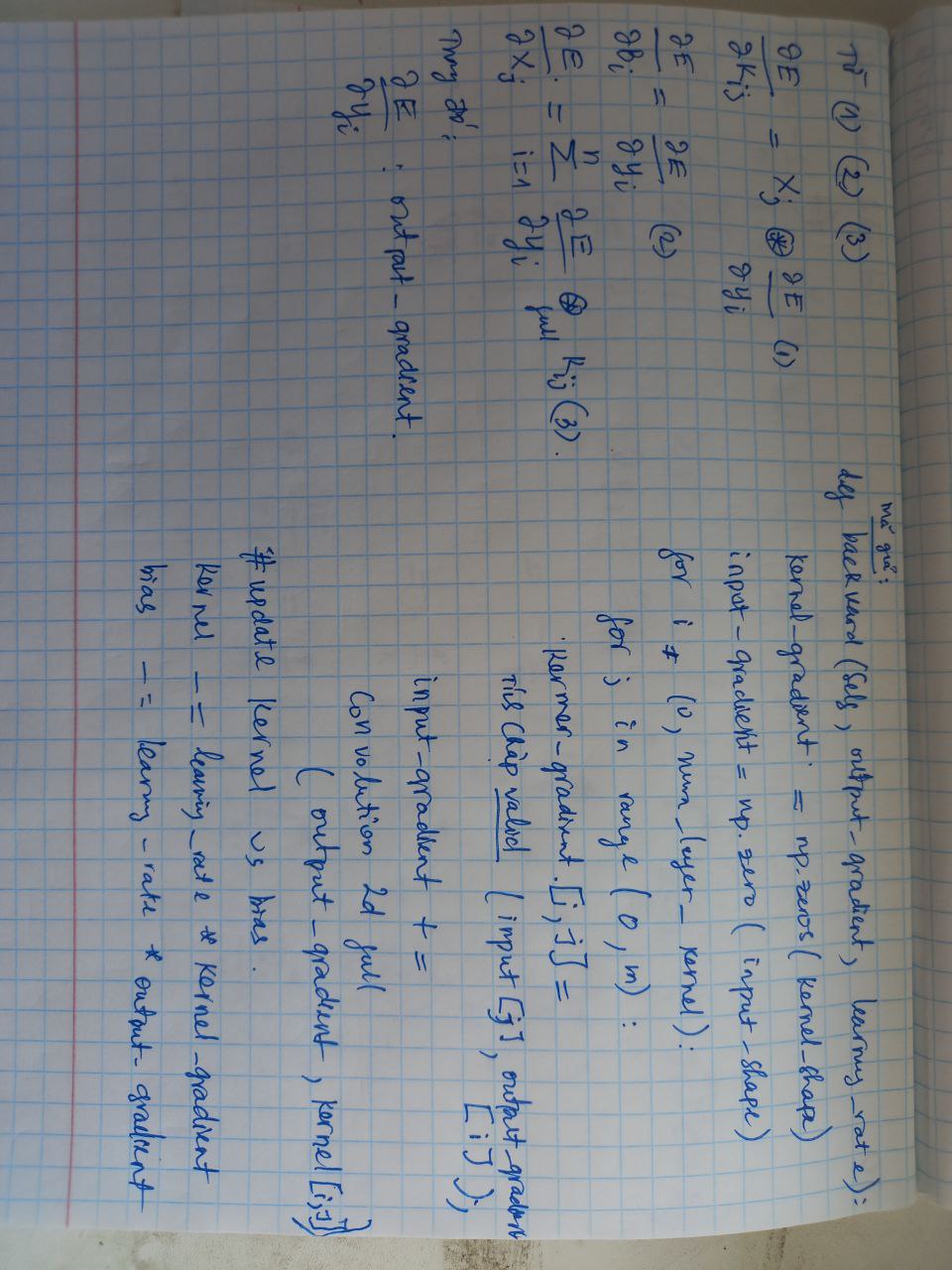

Phương pháp backward (đối với convolution)

toán học và cài đặt

1

2

3

4

5

6

7

8

9

10

11

12

def backward(self, output_gradient, learning_rate):

kernels_gradient = np.zeros(self.kernels_shape)

input_gradient = np.zeros(self.input_shape)

for i in range(self.depth):

for j in range(self.input_depth):

kernels_gradient[i, j] = signal.correlate2d(self.input[j], output_gradient[i], "valid")

input_gradient[j] += signal.convolve2d(output_gradient[i], self.kernels[i, j], "full")

self.kernels -= learning_rate * kernels_gradient

self.biases -= learning_rate * output_gradient

return input_gradient

Cài đặt đầy đủ CNN (cài từ đầu)

Class Layer (base)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# Lop base (Layer) gom co input, output

# method forward (input) --> "dao ham tien"

# method backward (output_gradient, learning rate) --> "dao ham nguoc"

# Cac lop ke thua lop (Layer) phai dinh nghia lai forward va backward

class Layer:

def __init__(self):

self.input = None

self.output = None

def forward(self, input):

# TODO: return output

pass

def backward(self, output_gradient, learning_rate):

# TODO: update parameters and return input gradient

pass

Hàm Activations

Cài đặt các hàm activation

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

import numpy as np

from layer import Layer

# Lop Activation (ke thua Layer)

# Activation (actionvation function, activation prime - dao ham)

class Activation(Layer):

def __init__(self, activation, activation_prime):

self.activation = activation #ham activation

self.activation_prime = activation_prime # dao ham cua ham activation tuong ung.

# Khi forward no se call ham activation cho input dua vao

def forward(self, input):

self.input = input

return self.activation(self.input)

# Khi backward no thuc hien phep mul giua output_gradient vs activation_prime cua input

def backward(self, output_gradient, learning_rate):

return np.multiply(output_gradient, self.activation_prime(self.input))

Cài đặt 2 hàm actionvation: Tanh, Sigmoid

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

import numpy as np

from layer import Layer

from activation import Activation

# Ham Tanh va sigmoid (2 ham nay la ham activation)

# Ham Tanh (ke thua Lop Activation)

# Dinh nghia ham Tanh va dao ham cua ham Tanh tuong ung

class Tanh(Activation):

def __init__(self):

def tanh(x):

return np.tanh(x)

def tanh_prime(x):

return 1 - np.tanh(x) ** 2

super().__init__(tanh, tanh_prime)

# Dinh nghia ham sigmoid va dao ham cua ham sigmoid

class Sigmoid(Activation):

def __init__(self):

def sigmoid(x):

return 1 / (1 + np.exp(-x))

def sigmoid_prime(x):

s = sigmoid(x)

return s * (1 - s)

super().__init__(sigmoid, sigmoid_prime)

# Lop softmax ke thua tu lop (Layer)

# Dinh nghia lai ham forward vs backward

# Softmax la mot Layer (no can duoc dinh nghia forward va backward)

class Softmax(Layer):

# forward: x^e / sum (x^e)

def forward(self, input):

tmp = np.exp(input)

self.output = tmp / np.sum(tmp)

return self.output

# Day la cong thuc tinh backward theo softmax

def backward(self, output_gradient, learning_rate):

# This version is faster than the one presented in the video

n = np.size(self.output)

return np.dot((np.identity(n) - self.output.T) * self.output, output_gradient)

# Original formula:

# tmp = np.tile(self.output, n)

# return np.dot(tmp * (np.identity(n) - np.transpose(tmp)), output_gradient)

Util Reshape.py

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

import numpy as np

from layer import Layer

# Reshape (no cung la mot Layer), can dinh nghia la forward vs backward

class Reshape(Layer):

# input cua Reshape la (input_shape, output_shape)

def __init__(self, input_shape, output_shape):

self.input_shape = input_shape

self.output_shape = output_shape

# qua trinh forward (input) --> reshape input theo (output_shape)

def forward(self, input):

return np.reshape(input, self.output_shape)

# qua trinh backward --> no reshape output_gradient theo dang (input_shape)

def backward(self, output_gradient, learning_rate):

return np.reshape(output_gradient, self.input_shape)

Lớp Dense

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

import numpy as np

from layer import Layer

# Lop Dense cung la mot Layer

class Dense(Layer):

# ham khoi tao (input_sie, vs output_size)

# --> sinh ra weights (output_size, input_size)

# --> bias (output_size, 1)

def __init__(self, input_size, output_size):

self.weights = np.random.randn(output_size, input_size)

self.bias = np.random.randn(output_size, 1)

# forward: tinh tich "dot" cua weights vs input + bias

def forward(self, input):

self.input = input

return np.dot(self.weights, self.input) + self.bias

# backward: tim lai input_gradient

# du lieu dau vao (output_gradient, learning_rate)

# cap nhat weigths vs bias (nguoc dau voi dao ham)

def backward(self, output_gradient, learning_rate):

# gradient cua weigths chinh la tich "dot" output_gradient vs intput.T

weights_gradient = np.dot(output_gradient, self.input.T)

# input_gradient chinh la tich "dot" cua weights.T vs output_gradient

input_gradient = np.dot(self.weights.T, output_gradient)

# cap nhat weights vs bias

self.weights -= learning_rate * weights_gradient

self.bias -= learning_rate * output_gradient

return input_gradient

Cài đặt network

Cài đặt phương thức train và predict

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

def predict(network, input):

# input: dau vao de predict

# network: mang da co.

output = input

# duyet qua tung layer --> tinh forward den cuoi cung

# --> output tuong ung voi input dau vao.

for layer in network:

output = layer.forward(output)

return output

def train(network, loss, loss_prime, x_train, y_train, epochs = 1000, learning_rate = 0.01, verbose = True):

# duyet tu epochs

for e in range(epochs):

error = 0

# moi epochs tinh lai error

# duyet toan bo tap train

for x, y in zip(x_train, y_train):

# forward

output = predict(network, x)

# error

error += loss(y, output)

# backward

grad = loss_prime(y, output)

# duyet nguoc netork, tinh backward voi gradient_output (tinh theo ham loss vs loss_prime dua vao)

for layer in reversed(network):

grad = layer.backward(grad, learning_rate)

error /= len(x_train)

if verbose:

print(f"{e + 1}/{epochs}, error={error}")

Hàm Chính

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

import numpy as np

from keras.datasets import mnist

from keras.utils import np_utils

from dense import Dense

from convolutional import Convolutional

from reshape import Reshape

from activations import Sigmoid

from losses import binary_cross_entropy, binary_cross_entropy_prime

from network import train, predict

def preprocess_data(x, y, limit):

zero_index = np.where(y == 0)[0][:limit]

one_index = np.where(y == 1)[0][:limit]

all_indices = np.hstack((zero_index, one_index))

all_indices = np.random.permutation(all_indices)

x, y = x[all_indices], y[all_indices]

x = x.reshape(len(x), 1, 28, 28)

x = x.astype("float32") / 255

y = np_utils.to_categorical(y)

y = y.reshape(len(y), 2, 1)

return x, y

# load MNIST from server, limit to 100 images per class since we're not training on GPU

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train, y_train = preprocess_data(x_train, y_train, 100)

x_test, y_test = preprocess_data(x_test, y_test, 100)

# neural network

network = [

Convolutional((1, 28, 28), 3, 5),

Sigmoid(),

Reshape((5, 26, 26), (5 * 26 * 26, 1)),

Dense(5 * 26 * 26, 100),

Sigmoid(),

Dense(100, 2),

Sigmoid()

]

# train

train(

network,

binary_cross_entropy,

binary_cross_entropy_prime,

x_train,

y_train,

epochs=20,

learning_rate=0.1

)

# test

for x, y in zip(x_test, y_test):

output = predict(network, x)

print(f"pred: {np.argmax(output)}, true: {np.argmax(y)}")

Link tham khảo

Tài liệu tham khảo

Machine learning cơ bản

Hết.